AMD’s Ryzen AI 300 series of mobile processors handily beats Intel’s mobile competitors at local large language model (LLM) performance, according to recent in-house testing by AMD. A new post on the company’s community blog outlines the tests AMD ran to beat Team Blue in AI performance, and explains how any user can get the most out of the popular LLM program LM Studio.

Most of AMD’s tests were carried out in LM Studio, a desktop app for downloading and hosting LLMs locally. The software, built on the llama.cpp code library, allows CPU and/or GPU acceleration to power LLMs and offers other controls over how the models behave.

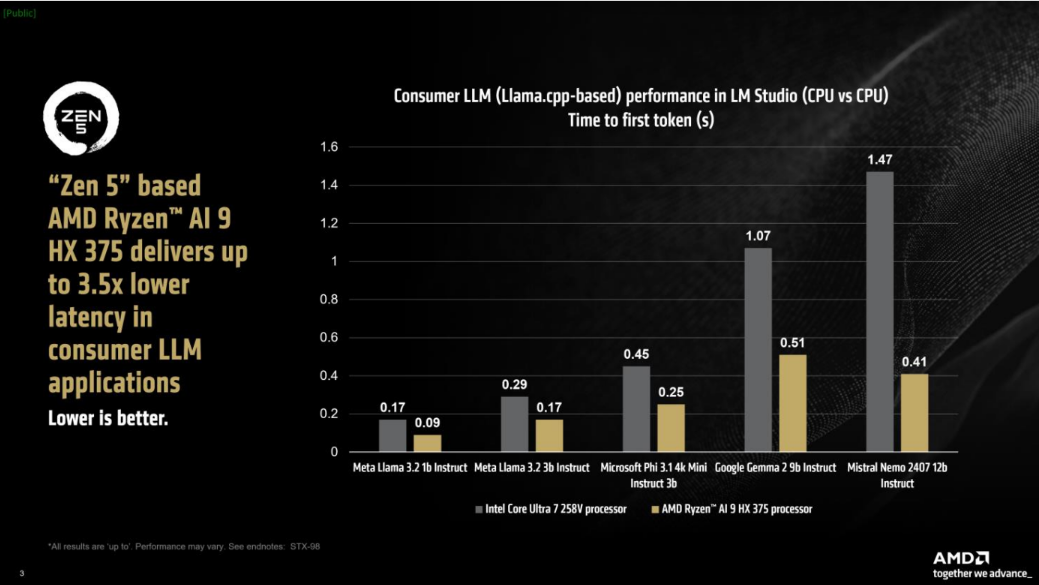

Using the 1B and 3B variants of Meta’s Llama 3.2, Microsoft’s Phi 3.1 4k Mini Instruct 3B, Google’s Gemma 2 9B, and Mistral’s Nemo 2407 12B models, AMD tested laptops powered by AMD’s flagship Ryzen AI 9 HX 375 against Intel’s midrange Core Ultra 7 258V. The two laptops were compared on speed, measured in tokens per second, and on latency, measured as the time it took to generate the first token. These roughly correspond to words printed on-screen per second and to the wait between submitting a prompt and the LLM starting its output.
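To make the two metrics concrete, here is a minimal sketch of how they could be measured from any streaming token source. AMD’s post, as described here, does not detail its exact methodology, so this assumes one common convention: time to first token runs from the start of iteration to the first token’s arrival, and tokens per second counts only the tokens after the first. The `measure_stream` and `fake_model` names are hypothetical, and `fake_model` is a stand-in for a real LLM, not an LM Studio or llama.cpp API.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, Optional


@dataclass
class GenerationStats:
    time_to_first_token: float  # seconds from prompt submission to first token
    tokens_per_second: float    # generation rate after the first token arrives
    total_tokens: int


def measure_stream(tokens: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> GenerationStats:
    """Time a lazy token stream (assumption: work starts when iteration starts)."""
    start = clock()  # treat the start of iteration as "prompt submitted"
    first: Optional[float] = None
    last: Optional[float] = None
    count = 0
    for _ in tokens:
        now = clock()  # arrival time of this token
        if first is None:
            first = now
        last = now
        count += 1
    if first is None or last is None:
        raise ValueError("stream produced no tokens")
    # Rate over the tokens that followed the first one; undefined for a single
    # token (or zero elapsed time), so report 0.0 in that case.
    elapsed = last - first
    tps = (count - 1) / elapsed if count > 1 and elapsed > 0 else 0.0
    return GenerationStats(first - start, tps, count)


def fake_model(n_tokens: int, prefill_delay: float, per_token_delay: float) -> Iterator[str]:
    """Hypothetical stand-in for an LLM: a pause, then evenly spaced tokens."""
    time.sleep(prefill_delay)  # simulates prompt processing before output
    for i in range(n_tokens):
        yield f"token{i}"
        time.sleep(per_token_delay)  # simulates per-token generation time


if __name__ == "__main__":
    stats = measure_stream(fake_model(20, prefill_delay=0.2, per_token_delay=0.02))
    print(f"TTFT: {stats.time_to_first_token:.2f} s, "
          f"throughput: {stats.tokens_per_second:.1f} tokens/s")
```

Injecting the `clock` parameter keeps the function deterministic under test; in real use the default `time.perf_counter` is appropriate because it is monotonic and high-resolution.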

As seen in the graphs above, the Ryzen AI 9 HX 375 outperforms the Core Ultra 7 258V across all five tested LLMs, both in speed and in time to start outputting text. At its most dominant, AMD’s chip is 27% faster than Intel’s. It’s unknown which laptops were used for the above tests, but AMD was quick to point out that the tested AMD laptop was running slower RAM than the Intel machine (7500 MT/s vs. 8533 MT/s), even though faster RAM usually corresponds to better LLM performance.
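Percentage claims like “27% faster” depend on which metric and which direction is used. As a sketch, assuming the usual relative-change convention (AMD’s post, as summarized here, does not state its formula), a throughput gain and a latency reduction are computed differently, because higher is better for tokens per second and lower is better for time to first token. The function names and the numbers in the demo are hypothetical, not AMD’s measurements.

```python
def percent_faster(candidate_tps: float, baseline_tps: float) -> float:
    """Throughput gain: how much higher candidate tokens/s is than the baseline, in %."""
    if baseline_tps <= 0:
        raise ValueError("baseline throughput must be positive")
    return (candidate_tps / baseline_tps - 1.0) * 100.0


def percent_less_latency(candidate_ttft: float, baseline_ttft: float) -> float:
    """Latency cut: how much shorter candidate time-to-first-token is, in %."""
    if baseline_ttft <= 0:
        raise ValueError("baseline latency must be positive")
    return (1.0 - candidate_ttft / baseline_ttft) * 100.0


if __name__ == "__main__":
    # Hypothetical numbers for illustration only, NOT AMD's measurements.
    print(f"{percent_faster(63.5, 50.0):.1f}% faster")
    print(f"{percent_less_latency(0.75, 1.0):.1f}% less latency")
```

One consequence worth keeping in mind: a 27% throughput gain means each token takes about 1 / 1.27 ≈ 79% as long, which is roughly a 21% cut in time per token, not 27%.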

It should be noted that Intel’s Core Ultra 7 258V isn’t exactly on a level playing field with the HX 375: the 258V sits in the middle of Intel’s 200-series SKUs, with a max turbo speed of 4.8 GHz versus the HX 375’s 5.1 GHz. AMD’s choice to pit its flagship Strix Point chip against Intel’s mid-spec chip seems a bit unfair, so take the 27% improvement claim with that in mind.

AMD also showed off LM Studio’s GPU acceleration features in tests pitting the HX 375 against itself. While the dedicated NPU in Ryzen AI 300-series laptops is meant to be the driving force in AI tasks, on-demand, program-level AI tasks are more likely to use the iGPU. AMD’s tests with GPU acceleration via the Vulkan API in LM Studio so heavily favored the HX 375 that AMD didn’t include Intel’s performance numbers with GPU acceleration turned on. With GPU acceleration enabled, the Ryzen AI 9 HX 375 generated up to 20% more tokens per second than when it ran the same tasks without GPU acceleration.

With so much current press around computers built on AI performance, vendors are eager to prove that AI matters to the end user. Apps like LM Studio or Intel’s AI Playground do their best to offer a user-friendly, foolproof way to harness the latest billion-plus-parameter LLMs for personal use. Whether large language models, and getting the best out of your computer to run them, matter to most users is another story.